Action-Informed Estimation and Planning: Clearing Clutter on Staircases via Quadrupedal Pedipulation

Prasanna Sriganesh, Barath Satheeshkumar, Anushree Sabnis and Matthew Travers

Abstract

For robots to operate autonomously in densely cluttered environments, they must reason about and potentially physically interact with obstacles to clear a path. Safely clearing a path on challenging terrain, such as a cluttered staircase, requires controlled interaction. However, relying solely on exteroceptive (e.g., visual) feedback for interaction is challenging, as the robot's own body may occlude the object, and sensor measurements may be noisy. In this work, we propose an "action-informed" approach that tightly couples estimation and planning for quadrupedal robots to clear clutter on staircases via "pedipulation" (i.e., manipulation with a leg). Our action-informed estimation module uses proprioceptive (e.g., foot contact) feedback during an interaction to predict the object's displacement. This prediction serves as an action-informed prior to guide the perception system, enabling robust tracking even after partial pushes or brief occlusions. This interaction-aware state estimate is used by our motion planner to decide on subsequent actions, such as re-pushing, climbing, or treating an object as immovable. We show in hardware experiments that our interaction-aware system significantly outperforms an open-loop baseline in task success rate (e.g., 85% vs 35% on one task) and tracking accuracy, generalizing to various objects and environments.
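
The abstract describes a loop. Proprioceptive contact feedback predicts how far a pushed object moved. That prediction serves as a prior for the perception system. A planner then chooses to re-push, climb, or treat the object as immovable. The sketch below illustrates that loop under stated assumptions: the object is a 2D point on the stair tread with Gaussian uncertainty, the prior is fused with a visual measurement by a Kalman-style update with a chi-square outlier gate, and the planner is a simple rule. All function names, noise values, and thresholds are hypothetical and are not taken from the authors' implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

import numpy as np


@dataclass
class Gaussian2D:
    """Hypothetical object position estimate (x, y) on a stair tread."""
    mean: np.ndarray  # shape (2,)
    cov: np.ndarray   # shape (2, 2)


def action_informed_prior(prev: Gaussian2D, foot_positions: np.ndarray,
                          in_contact: Sequence[bool],
                          push_noise: float = 0.02) -> Gaussian2D:
    """Shift the estimate by the foot's travel while it was in contact.

    Assumption (illustrative only): the object moves rigidly with the foot
    between consecutive samples where contact is detected.
    """
    disp = np.zeros(2)
    for k in range(1, len(foot_positions)):
        if in_contact[k - 1] and in_contact[k]:
            disp += foot_positions[k] - foot_positions[k - 1]
    # Hypothetical noise model: uncertainty grows with the pushed distance.
    q = (push_noise + 0.1 * np.linalg.norm(disp)) ** 2
    return Gaussian2D(prev.mean + disp, prev.cov + q * np.eye(2))


def fuse_with_vision(prior: Gaussian2D, z: Optional[np.ndarray],
                     meas_cov: np.ndarray, gate: float = 9.21) -> Gaussian2D:
    """Kalman-style update that falls back to the prior when the object is
    occluded (z is None) or the measurement fails a chi-square gate
    (9.21 is the 99% threshold for 2 degrees of freedom)."""
    if z is None:
        return prior
    s = prior.cov + meas_cov
    innov = z - prior.mean
    if float(innov @ np.linalg.solve(s, innov)) > gate:
        return prior
    k_gain = prior.cov @ np.linalg.inv(s)
    mean = prior.mean + k_gain @ innov
    cov = (np.eye(2) - k_gain) @ prior.cov
    return Gaussian2D(mean, cov)


def decide_action(est: Gaussian2D, start: np.ndarray, path_halfwidth: float,
                  attempts: int, max_attempts: int = 3,
                  min_motion: float = 0.03) -> str:
    """Hypothetical rule-based planner over the fused estimate."""
    if abs(est.mean[1]) > path_halfwidth:  # object is off the path (y = 0)
        return "climb"
    moved = np.linalg.norm(est.mean - start)
    if attempts >= max_attempts or (attempts > 0 and moved < min_motion):
        return "treat_as_immovable"
    return "re_push"


if __name__ == "__main__":
    start = Gaussian2D(np.array([0.30, 0.05]), 0.01 * np.eye(2))
    feet = np.array([[0.20, 0.00], [0.25, 0.05], [0.28, 0.10], [0.28, 0.15]])
    prior = action_informed_prior(start, feet, [False, True, True, True])
    occluded = fuse_with_vision(prior, None, 0.0025 * np.eye(2))
    seen = fuse_with_vision(occluded, np.array([0.34, 0.16]), 0.0025 * np.eye(2))
    print(decide_action(seen, start.mean, path_halfwidth=0.12, attempts=1))
    # -> climb
```

Skipping the vision update when the object is occluded lets the estimate coast on the proprioceptive prediction alone. This mirrors the abstract's claim of tracking through partial pushes and brief occlusions. A real system would need calibrated noise models and a richer contact model than rigid foot-following.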

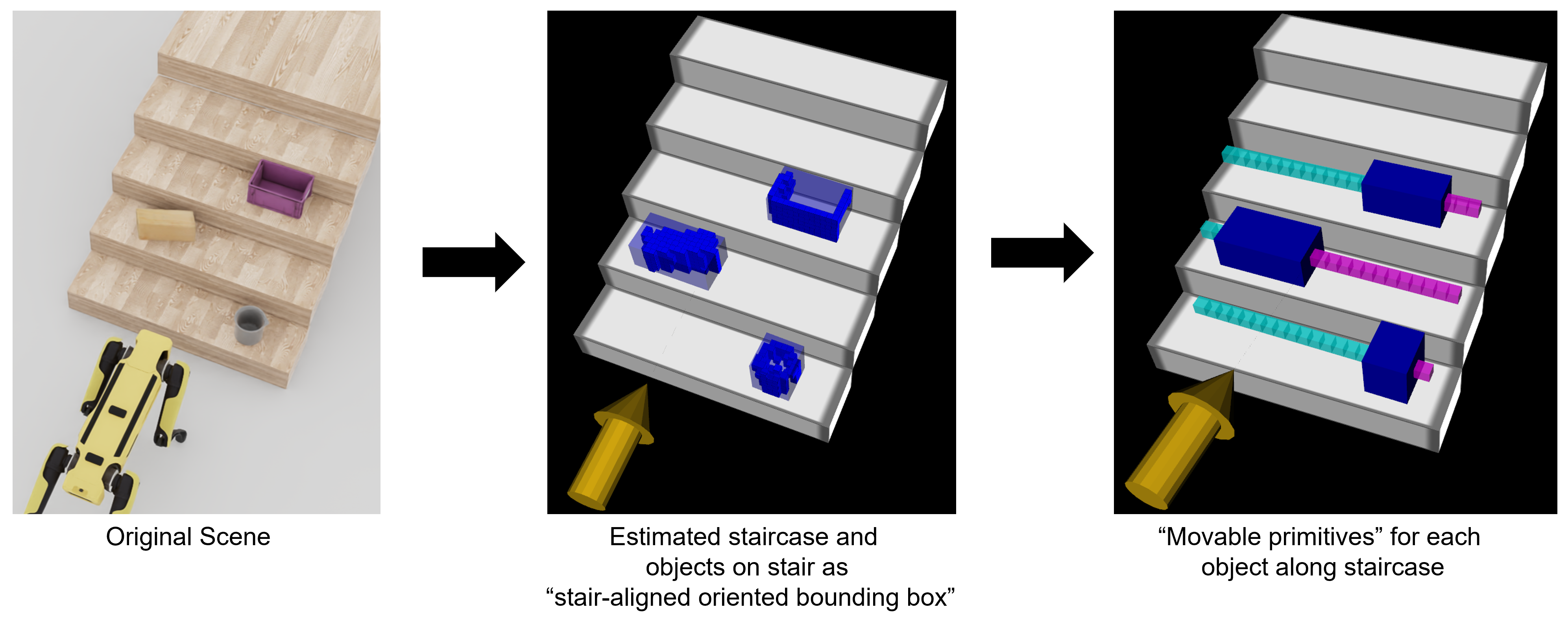

@article{sriganesh2025actioninformed,
  title={Action-Informed Estimation and Planning: Clearing Clutter on Staircases via Quadrupedal Pedipulation},
  author={Prasanna Sriganesh and Barath Satheeshkumar and Anushree Sabnis and Matthew Travers},
  journal={arXiv preprint arXiv:2509.20516},
  year={2025}
}